Hi, I'm Nathalie, an AI Engineer at Ridge-i Inc. In this post, I will briefly introduce FluentD as a tool for logging with Docker, and explain why keeping logging simple makes it easier to scale when working with containers.

Introduction

Logging lets you track events and debug a program once it is running. Unlike plain print statements, log entries can carry a severity level and can be saved to files.

However, if you run many applications on a system and each of them writes its logs to files, things get troublesome once the disk fills up. You can program each application to send its logs to a centralized location, but duplicating that implementation across several applications is not efficient.

An effective logging setup has two important characteristics: it is standardized and it is centralized. Both can be achieved with the help of log collectors. There are quite a few log collectors out there, but in this post we will focus on FluentD.

I chose FluentD over other options because it is open source, Ruby-based, and has an excellent library of plugins, which makes it easy to work with in most infrastructures. Compared with other log collectors, FluentD is lightweight and easy to understand and set up. It uses only about 20-40 MB of memory, which matters if you are working in a RAM-constrained, large-cluster environment. Thanks to its flexibility and modularity, it has become quite popular with companies, as seen on its testimonials page.

Now, let’s describe what standardized and centralized logging are.

Standardized logging

Let’s say one of the projects in your company uses log system A. It would not be ideal to use log system A in every project, because if support for log system A gets deprecated, every one of those projects would need an update.

To avoid this, applications can rely on stdout, the standard output stream that every Linux process has by default. Instead of writing logs to a file, the application writes them to stdout, so it does not have to manage log files or worry about filling the disk itself. Using stdout gives Linux processes a simple, standardized rule about where logs are sent.
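As a minimal sketch of this idea, assuming a Python application (the post does not prescribe a language), the standard logging module can be pointed at stdout instead of a file. The function name build_stdout_logger and the message format are my own choices for illustration.

```python
import logging
import sys


def build_stdout_logger(name: str = "app") -> logging.Logger:
    """Return a logger that writes severity-tagged records to stdout only."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)  # records below INFO are dropped
    logger.propagate = False       # avoid duplicate output via the root logger
    if not logger.handlers:        # attach the stdout handler only once
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
        )
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_stdout_logger()
    log.debug("not shown, because the level is INFO")
    log.info("service started")
    log.error("could not reach the database")
```

The application never opens a log file here. Whatever runs the process, Docker in our case, decides what happens to the output.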

When processes run in containers, Docker collects what they write to stdout and manages those logs, including the lifecycle of the log files.

For example, if we run a container, logs from it are printed on the terminal.

And if we check the Docker logs for the same container, exactly the same output is displayed.

This is because Docker, by default, reads the container's stdout and saves it to a log file in JSON format.
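To make the JSON format concrete, here is a small sketch that parses one line as written by Docker's default json-file driver, where each line is a JSON object with log, stream, and time fields. The sample line is made up for illustration, and the names DockerLogEntry and parse_docker_log_line are hypothetical.

```python
import json
from typing import NamedTuple


class DockerLogEntry(NamedTuple):
    stream: str   # "stdout" or "stderr"
    time: str     # timestamp string recorded by Docker
    message: str  # the text the process wrote, without the trailing newline


def parse_docker_log_line(line: str) -> DockerLogEntry:
    """Parse one line of a json-file log into its three fields."""
    record = json.loads(line)
    return DockerLogEntry(
        stream=record["stream"],
        time=record["time"],
        message=record["log"].rstrip("\n"),
    )


# Made-up sample line in the json-file layout (not taken from a real container).
SAMPLE_LINE = (
    '{"log":"Ready to accept connections\\n",'
    '"stream":"stdout","time":"2021-01-01T00:00:00.000000000Z"}'
)

if __name__ == "__main__":
    print(parse_docker_log_line(SAMPLE_LINE))
```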

To locate the log file, run docker system info and look for Docker Root Dir. This is where all Docker data, including logs, is saved; it is /var/lib/docker by default. You can mount this folder into a container using:

docker run -it -v /var/lib/docker:/var/lib/docker redis sh

Running ls /var/lib/docker/containers inside it lists the containers. In each container directory, you can find a file with the extension .log that contains the logs for that container. So, if you navigate to the directory of the container running redis, the log file should contain the same output that was written to stdout.
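The same lookup can be sketched in Python, assuming the default json-file driver, which names each file after its container ID with a -json.log suffix. The function name find_container_logs is hypothetical, and reading /var/lib/docker usually requires root privileges.

```python
from pathlib import Path
from typing import List


def find_container_logs(docker_root: str = "/var/lib/docker") -> List[Path]:
    """List the json-file logs under <docker_root>/containers/<id>/."""
    containers_dir = Path(docker_root) / "containers"
    # One sub-directory per container, each holding <container-id>-json.log.
    return sorted(containers_dir.glob("*/*-json.log"))


if __name__ == "__main__":
    for log_path in find_container_logs():
        print(log_path)
```

If your Docker Root Dir differs from the default, pass the value reported by docker system info as docker_root.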

In Docker, you can change the logging driver from the default JSON file to other destinations, but it is best to keep the default, particularly if you are working with Kubernetes, so that implementation and maintenance stay simple.

Centralized logging

Centralized logging is about bringing the logs scattered across containers and hosts into one specific location.

With applications writing to stdout and Docker reading stdout, we just need to configure a tool that brings everything together in one centralized location before Docker cleans the logs up. As previously mentioned, we will use FluentD for this.

Demo with FluentD

In my folder, I have a docker-compose.yaml, fluentd.conf, and a folder where I want FluentD to move the log files.

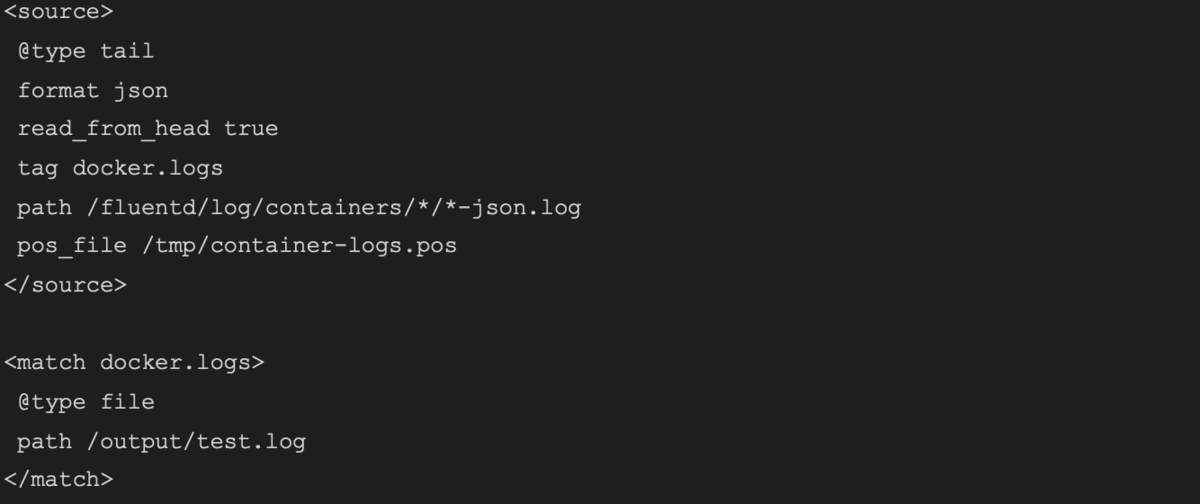

The docker-compose file mounts the Docker containers directory into a directory inside the FluentD container, and it also provides a simple FluentD configuration file that tells FluentD how to collect the logs.

The FluentD configuration file defines a source, which says where to gather the logs from, and an output, which says where to write them. So FluentD will pick up every file ending in -json.log in the mounted directory and save the contents to /output/test.log.

For the demo, change to the directory that contains docker-compose.yaml and run docker-compose up to start the FluentD container.

In another terminal, run docker run -it redis. After a while, you should see a buffer file in the logs folder containing the logs from redis.

So that’s a basic introduction to standardized and centralized logging using FluentD.

Thank you.